Ubuntu 20.04

containerd as the Kubernetes CRI

Helm, Prometheus, and Grafana

1. Install nvidia-container-toolkit (on both the master node and the worker nodes)

```bash
distribution=$(. /etc/os-release;echo $ID$VERSION_ID)
curl -s -L https://nvidia.github.io/libnvidia-container/gpgkey | sudo apt-key add -
curl -s -L https://nvidia.github.io/libnvidia-container/$distribution/libnvidia-container.list | sudo tee /etc/apt/sources.list.d/libnvidia-container.list
sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit
```

Then reboot.
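After the reboot, a quick sanity check is that the toolkit's binaries are on PATH. The helper below, require_cmds, is hypothetical and not part of any NVIDIA tool. The binary names in the usage comment are an assumption based on the BinaryName used in step 2 and on the libnvidia-container CLI, so adjust them to what your package version actually installs.

```bash
# Hypothetical helper: succeeds only if every named command is on PATH.
# Each missing command is reported on stderr.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage on a GPU node (binary names are assumptions, see above):
#   require_cmds nvidia-container-runtime nvidia-container-cli && echo "toolkit OK"
```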

2. Register nvidia-container-runtime as containerd's default runtime (on both the master node and the worker nodes)

(Based on https://github.com/NVIDIA/k8s-device-plugin.)

Edit /etc/containerd/config.toml: find each bracketed table (level) and add the keys shown beneath it. If a key already exists, for example `default_runtime_name = "runc"` in a config generated by `containerd config default`, change its value instead of adding a second copy, because TOML does not allow duplicate keys.

```toml
[plugins]
  [plugins."io.containerd.grpc.v1.cri"]
    [plugins."io.containerd.grpc.v1.cri".containerd]
      default_runtime_name = "nvidia"

      [plugins."io.containerd.grpc.v1.cri".containerd.runtimes]
        [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.nvidia]
          privileged_without_host_devices = false
          runtime_engine = ""
          runtime_root = ""
          runtime_type = "io.containerd.runc.v2"
          [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.nvidia.options]
            BinaryName = "/usr/bin/nvidia-container-runtime"
```
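Before restarting containerd, a rough check can catch the most common mistakes: the default runtime not being "nvidia" or the BinaryName pointing elsewhere. This is a hypothetical grep-based sketch (check_nvidia_runtime_config is an illustrative name), not a TOML parser, so it only confirms that the expected lines are present.

```bash
# Hypothetical helper: text-level check of a containerd config.
# Returns 0 and prints "ok: FILE" if the nvidia default runtime settings are found,
# 1 if a setting is missing, 2 if the file cannot be read.
check_nvidia_runtime_config() {
  local file="${1:-/etc/containerd/config.toml}"
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }
  grep -Eq '^[[:space:]]*default_runtime_name[[:space:]]*=[[:space:]]*"nvidia"' "$file" \
    || { echo 'default_runtime_name is not "nvidia"' >&2; return 1; }
  grep -Fq '[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.nvidia.options]' "$file" \
    || { echo "nvidia runtime options table not found" >&2; return 1; }
  grep -Eq '^[[:space:]]*BinaryName[[:space:]]*=[[:space:]]*"/usr/bin/nvidia-container-runtime"' "$file" \
    || { echo "BinaryName does not point to nvidia-container-runtime" >&2; return 1; }
  echo "ok: $file"
}

# Usage (before step 3):
#   check_nvidia_runtime_config /etc/containerd/config.toml
```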

3. Restart containerd (on both the master node and the worker nodes)

```bash
sudo systemctl restart containerd
```

4. Deploy k8s-device-plugin to Kubernetes (on the master node)

```bash
kubectl create -f https://raw.githubusercontent.com/NVIDIA/k8s-device-plugin/v0.13.0/nvidia-device-plugin.yml
```
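One way to confirm the device plugin works is to schedule a pod that requests a GPU through the `nvidia.com/gpu` resource. The sketch below prints such a manifest. The function name and pod name are hypothetical, and the default image is an assumption modeled on the example in the k8s-device-plugin README; pass any CUDA image you trust as the first argument.

```bash
# Hypothetical helper: prints a minimal Pod manifest requesting one GPU
# via the nvidia.com/gpu extended resource exposed by k8s-device-plugin.
gpu_test_pod_manifest() {
  # Default image is an assumption (device-plugin README style example).
  local image="${1:-nvcr.io/nvidia/k8s/cuda-sample:vectoradd-cuda10.2}"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: gpu-test
spec:
  restartPolicy: Never
  containers:
    - name: cuda-container
      image: ${image}
      resources:
        limits:
          nvidia.com/gpu: 1
EOF
}

# Usage (on the master node):
#   gpu_test_pod_manifest | kubectl apply -f -
#   kubectl logs gpu-test          # after the pod has completed
#   kubectl delete pod gpu-test
```

Before applying it, `kubectl describe node NODE_NAME` should list `nvidia.com/gpu` under Capacity and Allocatable once the device plugin pod on that node is running.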

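5. Configure and deploy Prometheus (on the master node)

```bash
# Add and update the Helm repository
helm repo add prometheus-community \
  https://prometheus-community.github.io/helm-charts
helm repo update

# Install
helm install prometheus-community/kube-prometheus-stack \
  --create-namespace --namespace prometheus \
  --generate-name \
  --set prometheus.service.type=NodePort \
  --set prometheus.prometheusSpec.serviceMonitorSelectorNilUsesHelmValues=false
```

Setting `serviceMonitorSelectorNilUsesHelmValues=false` makes Prometheus select ServiceMonitors from all releases rather than only those labeled for this Helm release, so the ServiceMonitor created by a separately installed chart such as dcgm-exporter can be picked up.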

6. Set up DCGM (dcgm-exporter) (on the master node)

```bash
# Add and update the Helm repository
helm repo add gpu-helm-charts \
  https://nvidia.github.io/dcgm-exporter/helm-charts
helm repo update

# Install
helm install \
  --generate-name \
  gpu-helm-charts/dcgm-exporter
```
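To check that dcgm-exporter is actually producing metrics before looking at Prometheus, you can read its /metrics endpoint directly. The helper below is a hypothetical sketch that scans Prometheus text-format output for a DCGM metric (DCGM_FI_DEV_GPU_UTIL, GPU utilization, by default). The port 9400 and the DCGM_SVC variable in the usage comment are assumptions: 9400 is dcgm-exporter's usual port, and the service name depends on what `--generate-name` produced.

```bash
# Hypothetical helper: reads Prometheus text-format metrics on stdin and
# succeeds if at least one sample (not just a # HELP/# TYPE comment)
# of the given metric is present.
has_dcgm_metric() {
  local metric="${1:-DCGM_FI_DEV_GPU_UTIL}"
  # A sample line starts with the metric name followed by labels "{" or a space.
  grep -Eq "^${metric}[{ ]"
}

# Usage (DCGM_SVC is the dcgm-exporter service name from `kubectl get svc`):
#   kubectl port-forward "svc/$DCGM_SVC" 9400:9400 &
#   curl -s http://localhost:9400/metrics | has_dcgm_metric && echo "DCGM metrics OK"
```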

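Caution

Guides from before 2021 give the Helm repository address as gpu-monitoring-tools, as shown below. Using that repository leads to an error where metrics are not collected, so do not use it:

```bash
# Outdated repository: do not use
helm repo add gpu-helm-charts \
  https://nvidia.github.io/gpu-monitoring-tools/helm-charts
```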

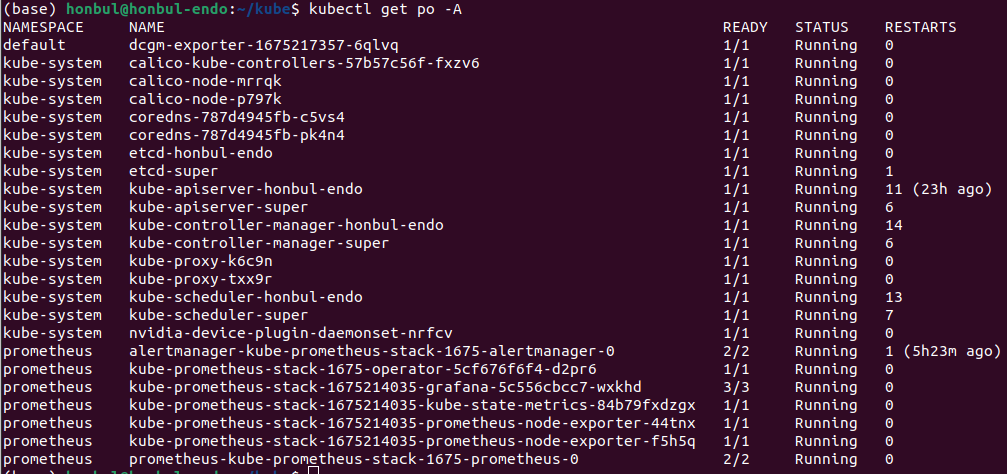

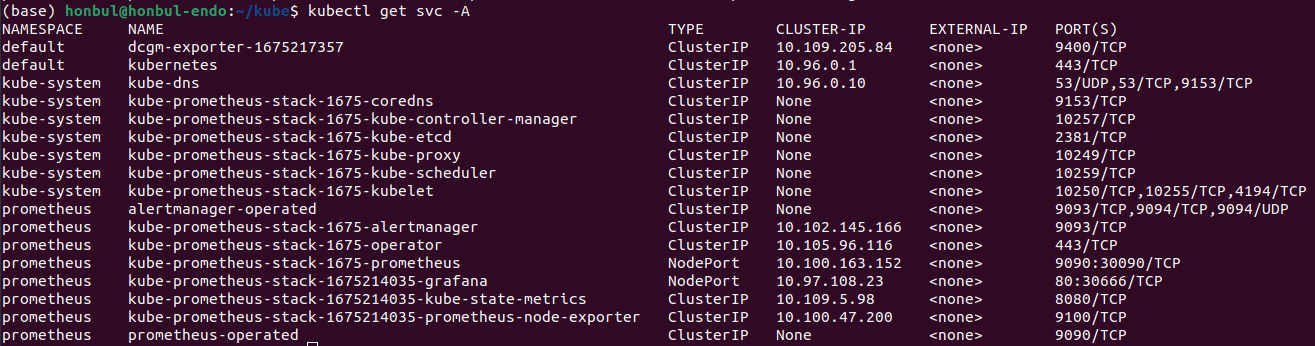

7. Check the pods and services

```bash
# Pods
kubectl get po -A

# Services
kubectl get svc -A
```
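With many pods in the cluster, it helps to filter for the ones that are not healthy, for example a dcgm-exporter pod stuck in CrashLoopBackOff (see the errors section below). This hypothetical awk filter assumes the `-A` column layout NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE, so STATUS is the fourth field.

```bash
# Hypothetical helper: reads `kubectl get po -A --no-headers` on stdin and
# prints NAMESPACE/NAME STATUS for pods that are neither Running nor Completed.
not_running_pods() {
  awk 'NF >= 4 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Usage:
#   kubectl get po -A --no-headers | not_running_pods
```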


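8. Open the Grafana dashboard

8-1. Change the Grafana service's exposure type to NodePort

```bash
kubectl patch svc kube-prometheus-stack-1608276926-grafana -n prometheus -p '{ "spec": { "type": "NodePort" } }'
```

kube-prometheus-stack-1608276926 is the release name that `--generate-name` produced in this installation; substitute the name shown by `kubectl get svc -n prometheus` in yours.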
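Because the release name is generated, the Grafana service name differs per installation. A hypothetical sketch that looks it up instead of typing it by hand: it reads `kubectl get svc -n prometheus -o name` output (lines of the form service/NAME) and prints the first service whose name ends in -grafana.

```bash
# Hypothetical helper: extract the Grafana service name from
# `kubectl get svc -n prometheus -o name` output on stdin.
grafana_svc_name() {
  sed -n 's#^service/\(.*-grafana\)$#\1#p' | head -n 1
}

# Usage:
#   svc=$(kubectl get svc -n prometheus -o name | grafana_svc_name)
#   kubectl patch svc "$svc" -n prometheus -p '{ "spec": { "type": "NodePort" } }'
```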

8-2. Check the exposed port

```bash
kubectl get svc -n prometheus
```
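In the PORT(S) column, a NodePort service shows mappings as port:nodePort/protocol (for example 80:31234/TCP), and the nodePort is the number to use when connecting. The hypothetical parser below pulls the first nodePort out of that column, assuming the namespaced layout NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE. When you only need the number, kubectl's jsonpath output in the usage comment is the more direct route.

```bash
# Hypothetical helper: reads `kubectl get svc --no-headers` lines on stdin and
# prints the first nodePort found in the PORT(S) column (5th field).
# Services without a nodePort (e.g. "80/TCP") produce no output.
first_node_port() {
  awk '{ print $5 }' | sed -n 's#^[0-9]*:\([0-9]*\)/.*#\1#p' | head -n 1
}

# Usage ($svc is the Grafana service name):
#   kubectl get svc "$svc" -n prometheus --no-headers | first_node_port
# Alternative using kubectl's jsonpath output:
#   kubectl get svc "$svc" -n prometheus -o jsonpath='{.spec.ports[0].nodePort}'
```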

8-3. Connect

Open 'IP address:port' of the node where Prometheus is deployed, using the NodePort found in 8-2.

The initial ID/password is admin/prom-operator.
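If admin/prom-operator does not work, for instance because the password was overridden in the Helm values, the current value can be read from the Grafana Secret. This sketch assumes the Grafana chart's convention of a Secret named RELEASE-grafana holding the key admin-password; the function name is hypothetical, and KUBECTL can be overridden (for example with a stub) to test it without a cluster.

```bash
# Sketch: print the Grafana admin password stored in the chart's Secret.
# Assumes Secret "RELEASE-grafana" with key admin-password (Grafana chart convention).
grafana_admin_password() {
  local release="$1" ns="${2:-prometheus}"
  # Secret data is base64-encoded, so decode it after extracting the field.
  ${KUBECTL:-kubectl} get secret -n "$ns" "${release}-grafana" \
    -o jsonpath='{.data.admin-password}' | base64 --decode
  echo
}

# Usage:
#   grafana_admin_password kube-prometheus-stack-1608276926
```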

8-4. NVIDIA's official DCGM-exporter dashboard

https://grafana.com/grafana/dashboards/12239-nvidia-dcgm-exporter-dashboard/


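Resolved errors

1. dcgm-exporter stuck in CrashLoopBackOff

- Check that nvidia-container-runtime is correctly configured as the default runtime of the Kubernetes CRI (containerd, steps 2 and 3).

2. dcgm-exporter is Running, but its metrics do not reach Prometheus/Grafana

- With a Prometheus installed via Helm, installing dcgm-exporter from the GitHub repository (instead of with Helm) results in no metrics being collected.

- Install dcgm-exporter with Helm instead (mind the repository address; see the caution in step 6).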
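For case 2, one plausible thing to check (an assumption; the cause is not stated above) is whether a ServiceMonitor for dcgm-exporter exists, since the Prometheus Operator in kube-prometheus-stack discovers scrape targets through ServiceMonitor objects. Whether the dcgm-exporter chart creates one by default is worth confirming in its values.yaml. The helper name below is hypothetical.

```bash
# Hypothetical helper: reads `kubectl get servicemonitor -A --no-headers`
# on stdin and succeeds if any ServiceMonitor name (2nd field) contains "dcgm".
has_dcgm_servicemonitor() {
  awk '$2 ~ /dcgm/ { found = 1 } END { exit !found }'
}

# Usage:
#   kubectl get servicemonitor -A --no-headers | has_dcgm_servicemonitor \
#     || echo "no dcgm-exporter ServiceMonitor found"
```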

When a new GPU monitoring node is added

Proceed in this order: install nvidia-container-toolkit (step 1), reboot, install containerd, configure containerd for NVIDIA (steps 2 and 3), then install Kubernetes and join the cluster.

References

- NVIDIA/k8s-device-plugin: NVIDIA device plugin for Kubernetes (GitHub): https://github.com/NVIDIA/k8s-device-plugin
- Installation Guide, NVIDIA Cloud Native Technologies documentation: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#id3
- NVIDIA/dcgm-exporter: NVIDIA GPU metrics exporter for Prometheus leveraging DCGM (GitHub): https://github.com/NVIDIA/dcgm-exporter
- DCGM-Exporter, NVIDIA Cloud Native Technologies documentation: https://docs.nvidia.com/datacenter/cloud-native/gpu-telemetry/dcgm-exporter.html
- GPU Monitor (1week.tistory.com, 2020.12.23)
- [kubernetes] Using Nvidia GPUs in k3s (velog.io)
- NVIDIA/gpu-operator: NVIDIA GPU Operator creates/configures/manages GPUs atop Kubernetes (GitHub): https://github.com/NVIDIA/gpu-operator
- Getting Started, NVIDIA Cloud Native Technologies documentation (docs.nvidia.com)
- https://grafana.com/grafana/dashboards/15117-nvidia-dcgm-exporter/
